Cloudera Data Platform Private Cloud Base is the on-premises version of the Cloudera Data Platform. This new offering combines the best of Cloudera Enterprise Data Hub and Hortonworks Data Platform Enterprise, as well as new features and enhancements throughout the stack. This unified distribution is a scalable and adaptable platform on which to securely run a wide range of workloads.

CDP Private Cloud Base supports a variety of hybrid solutions, including workloads created with CDP Private Cloud Experiences, in which compute tasks are separated from data storage and data can be accessed from remote clusters. By managing storage, table schema, authentication, authorization, and governance, this hybrid approach serves as a foundation for containerized applications.

Benefits

The following are the advantages of separating Hadoop cluster hosts (master hosts, utility hosts, gateway hosts, or worker hosts) from storage hosts (HDFS Transparency NameNodes and DataNodes):

The Hadoop and storage layers can be managed independently and by different teams.

Because IBM Spectrum Scale is not installed on the Hadoop cluster hosts, those hosts do not need specific kernel levels.

Only the IBM Spectrum Scale hosts must have the same `uid`/`gid` values.

Only the IBM Spectrum Scale nodes require password-less SSH, for either the root user or a non-root user with `sudo` privileges.

Verify Installation of Spectrum Scale

The `mmlscluster` output shows that only the first GPFS node has been assigned the quorum and manager roles.

```
/usr/lpp/mmfs/bin/mmlscluster

GPFS cluster information
========================
  GPFS cluster name:         gpfs01
  GPFS cluster id:           14526312809412325839
  GPFS UID domain:           gpfs01
  Remote shell command:      /usr/bin/ssh
  Remote file copy command:  /usr/bin/scp
  Repository type:           CCR

 Node  Daemon node name  IP address    Admin node name  Designation
--------------------------------------------------------------------
   1   gpfs01            172.17.0.101  gpfs01           quorum-manager
   2   gpfs02            172.17.0.102  gpfs02
```

The file system fs_gpfs01 is composed of two Network Shared Disks (NSDs):

```
/usr/lpp/mmfs/bin/mmlsnsd -a

 File system   Disk name    NSD servers
---------------------------------------------------------------------------
 fs_gpfs01     mynsd1       (directly attached)
 fs_gpfs01     mynsd2       (directly attached)
```

To avoid split brain, GPFS, like most other cluster software, requires a majority of the quorum nodes to be online before the file system can be used. Because this cluster has an even number of nodes, one or more tiebreaker disks must be defined.
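The majority rule is plain arithmetic: with n quorum nodes, at least floor(n/2) + 1 of them must be up. The minimal sketch below (the function names `quorum_majority` and `tolerated_failures` are hypothetical, for illustration only) shows why an even count is fragile. The `mmchconfig tiebreakerDisks` line is left as a comment; check the IBM documentation for your release before running it.

```shell
#!/usr/bin/env bash
# Hypothetical helper: number of quorum nodes that must be online
# (a strict majority of the defined quorum nodes).
quorum_majority() {
  local n=$1
  echo $(( n / 2 + 1 ))
}

# Hypothetical helper: how many quorum nodes can fail before the
# file system becomes unavailable, assuming no tiebreaker disks.
tolerated_failures() {
  local n=$1
  echo $(( n - (n / 2 + 1) ))
}

for n in 1 2 3 4 5; do
  echo "quorum nodes=$n majority=$(quorum_majority "$n") tolerated failures=$(tolerated_failures "$n")"
done

# With tiebreaker disks, a single surviving quorum node that can still
# access the disks keeps quorum. Example using one of the NSDs above:
#   /usr/lpp/mmfs/bin/mmchconfig tiebreakerDisks="mynsd1"
```

With two quorum nodes the majority is 2, so no node may fail; three or four quorum nodes tolerate one failure. This is why a two-node cluster relies on tiebreaker disks.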

Access Control on Spectrum Scale

Ensure that the IBM Spectrum Scale file system ACL semantics setting (`-k`) is set to `all`:

```
/usr/lpp/mmfs/bin/mmlsfs fs_gpfs01 -k

flag                value                    description
------------------- ------------------------ -----------------------------------
 -k                 nfs4                     ACL semantics in effect
```

Here, fs_gpfs01 is the configured file system.
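The requirement is `all`, but the sample output still shows `nfs4`. Below is a minimal sketch that reads the `-k` line of `mmlsfs` and tells you whether a change is needed. The function names `acl_semantics` and `acl_is_all` are hypothetical. `mmchfs <device> -k all` is the Spectrum Scale command that changes the setting. Confirm it against the documentation for your release before running it on a production file system.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `mmlsfs <fs> -k` output on stdin and print the
# ACL semantics value (2nd column of the line whose flag is -k).
acl_semantics() {
  awk '$1 == "-k" { print $2 }'
}

# Succeeds only if the ACL semantics are already "all".
acl_is_all() {
  [ "$(acl_semantics)" = "all" ]
}

# Usage on a live cluster (commented out so the sketch runs anywhere):
#   if ! /usr/lpp/mmfs/bin/mmlsfs fs_gpfs01 -k | acl_is_all; then
#     /usr/lpp/mmfs/bin/mmchfs fs_gpfs01 -k all
#   fi
```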

Installing IBM Spectrum Scale on the Cloudera Data Platform

IBM Spectrum Scale, also known as GPFS, is a high-performance, scalable file system that can be used to manage large amounts of data across a cluster of servers. It is often used in environments where data must be accessed by multiple users and applications simultaneously, and where data must be stored in a highly available and durable manner.

Installing IBM Spectrum Scale on Cloudera Data Platform (CDP) 7 involves several steps. Here is a high-level overview of the process:

Obtain the necessary software:

- Download the IBM Spectrum Scale software from the IBM website.

- Download the Cloudera Data Platform (CDP) 7 software from the Cloudera website.

Install IBM Spectrum Scale:

- Follow the instructions provided by IBM to install and configure IBM Spectrum Scale on your cluster. This will likely involve installing the software on each node in the cluster, configuring the nodes to communicate with each other, and setting up the file system.

Install Cloudera Data Platform (CDP):

- Follow the instructions provided by Cloudera to install and configure CDP on your cluster. This will involve installing the CDP software on each node in the cluster, configuring the nodes to communicate with each other, and setting up the CDP environment.

Configure IBM Spectrum Scale for use with CDP:

- Once both IBM Spectrum Scale and CDP are installed and configured, you will need to integrate them so that CDP can use IBM Spectrum Scale as its underlying file system. This may involve configuring CDP to use IBM Spectrum Scale as the default file system or setting up specific CDP services or applications to use IBM Spectrum Scale.

It is important to carefully follow the installation and configuration instructions provided by both IBM and Cloudera to ensure that IBM Spectrum Scale is properly installed and configured on your CDP cluster.

Overview of CDP Private Cloud Base installation

Designate separate nodes for IBM Spectrum Scale CES HDFS and CDP Private Cloud Base clusters.

IBM Spectrum Scale

Set up the CES HDFS cluster:

Install the CES HDFS HA cluster.

If you need Kerberos, enable Kerberos on the CES HDFS cluster.

Verify the installation.

Set up the CDP Private Cloud Base cluster:

Install Cloudera Manager.

Optional: Enable Kerberos in Cloudera Manager.

Optional: Enable Auto-TLS in Cloudera Manager (TLS is supported starting with CDP Private Cloud Base 7.1.6).

Deploy the IBM Spectrum Scale CSD and restart Cloudera Manager.

Create a new CDP Private Cloud Base cluster. Select the IBM Spectrum Scale service along with YARN, ZooKeeper, and other services as needed.

Enable NameNode HA in the IBM Spectrum Scale service and restart all the services.

Verify the installation.

Create CDP Users and groups

When you register hosts with Cloudera Manager, it creates the Hadoop users and groups that correspond to the services on all managed hosts. For the IBM Spectrum Scale HDFS Transparency hosts, however, these users and groups must be created manually before the hosts are registered with Cloudera Manager. Because IBM Spectrum Scale is a POSIX file system, any common system user or group must have the same UID and GID on all IBM Spectrum Scale nodes.

If you use Windows AD or an LDAP-based network, the Hadoop users and groups on your HDFS Transparency nodes should also have consistent UIDs and GIDs across all HDFS Transparency nodes.
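One way to confirm that consistency is to run `id` for each Hadoop user on every HDFS Transparency node and compare the results. Below is a minimal sketch of the comparison step only. It assumes the data has already been collected as `host user uid gid` lines (for example over ssh, as in the commented usage). The function name `check_id_consistency` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read "host user uid gid" lines on stdin and report
# any user whose UID or GID differs between hosts.
# Exit status: 0 if everything is consistent, 1 otherwise.
check_id_consistency() {
  awk '
    NF == 4 {
      key = $3 ":" $4
      if (!($2 in seen)) { seen[$2] = key; first[$2] = $1 }
      else if (seen[$2] != key) {
        printf "MISMATCH %s: %s has %s, %s has %s\n", $2, first[$2], seen[$2], $1, key
        bad = 1
      }
    }
    END { exit bad }
  '
}

# Collecting the input could look like this (hosts and users are examples):
#   for h in nn01.gpfs.net nn02.gpfs.net dn01.gpfs.net; do
#     for u in hdfs yarn; do
#       echo "$h $u $(ssh "$h" id -u "$u") $(ssh "$h" id -g "$u")"
#     done
#   done | check_id_consistency
```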

We need to create the CDP Private Cloud Base users and groups on the IBM Spectrum Scale nodes, as shown below. I initially skipped this step. After learning about it, I re-ran the script, and it created some users that had been missing, so I recommend running it.

Creating users and groups across Spectrum Scale nodes.

/usr/lpp/mmfs/hadoop/scripts/gpfs_create_hadoop_users_dirs.py --create-users-and-groups --hadoop-hosts nn01.gpfs.net,nn02.gpfs.net,dn01.gpfs.net,dn02.gpfs.net,dn03.gpfs.net

Verify that the users and groups were created:

/usr/lpp/mmfs/hadoop/scripts/gpfs_create_hadoop_users_dirs.py --verify-users-and-groups --hadoop-hosts nn01.gpfs.net,nn02.gpfs.net,dn01.gpfs.net,dn02.gpfs.net,dn03.gpfs.net

[ Verification successful. ]

To get the comma-separated list of cluster nodes used for `--hadoop-hosts`, run the following command:

mmlscluster | awk '!n && /Daemon/{ n=1; next } n && nn--' | awk '{ print $2 }' | paste -sd, -

nn01.gpfs.net,nn02.gpfs.net,dn01.gpfs.net,dn02.gpfs.net,dn03.gpfs.net,

(Remove the trailing comma from the output before using it.)
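The trailing comma most likely comes from the blank line at the end of the `mmlscluster` output. `awk '{ print $2 }'` turns that line into an empty entry. The minimal sketch below skips blank lines, so its output can be passed directly to `--hadoop-hosts`. It assumes the table layout shown in the `mmlscluster` output earlier: a header line containing `Daemon node name`, followed by a dashed separator. The function name `node_list` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `mmlscluster` output on stdin and print the
# daemon node names as one comma-separated line with no trailing comma.
node_list() {
  awk '
    /Daemon node name/                 { hdr = 1; next }   # table header
    hdr && /^[ \t]*-+[ \t]*$/          { rows = 1; next }  # dashed separator
    rows && NF >= 2                    { print $2 }        # 2nd column = daemon node name
  ' | paste -sd, -
}

# Usage on a live cluster:
#   /usr/lpp/mmfs/bin/mmlscluster | node_list
```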

Installing Cloudera Manager

Before installing Cloudera Manager, we must stop the HDFS Transparency services on the Spectrum Scale nodes.

- Stop the HDFS Transparency services

/usr/lpp/mmfs/hadoop/sbin/mmhdfs hdfs-dn stop

/usr/lpp/mmfs/bin/mmces service stop HDFS -a

Installation document

https://www.ibm.com/docs/en/spectrum-scale-bda?topic=installing-cloudera-data-platform-private-cloud-base-spectrum-scale

Make sure you run the following commands before installing Spectrum Scale.

First, stop the daemons on the CES cluster:

/usr/lpp/mmfs/hadoop/sbin/mmhdfs hdfs-dn stop

/usr/lpp/mmfs/bin/mmces service stop HDFS -a

```
/usr/lpp/mmfs/bin/mmces state show CES -a

NODE                            CES
------------------------------- --------
gpfs01                          HEALTHY
gpfs02                          FAILED
```

If any node is in the FAILED state, run the following commands again:

/usr/lpp/mmfs/hadoop/sbin/mmhdfs hdfs-dn stop

/usr/lpp/mmfs/bin/mmces service stop HDFS -a
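With many nodes, you can filter the state table instead of reading it line by line. The minimal sketch below assumes the two-column `NODE CES` layout shown above. The function name `failed_ces_nodes` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `mmces state show CES -a` output on stdin and
# print the nodes whose CES state is FAILED, one per line.
failed_ces_nodes() {
  awk '$2 == "FAILED" { print $1 }'
}

# Usage on a live cluster:
#   failed=$(/usr/lpp/mmfs/bin/mmces state show CES -a | failed_ces_nodes)
#   [ -n "$failed" ] && echo "CES FAILED on: $failed"
```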

Install Cloudera Manager

It's always better to follow the latest documentation from Cloudera:

CDP Private Cloud Base Installation Guide

I had configured a local repository (using httpd) on one of the nodes.
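Each host typically consumes a local mirror like this through a yum `.repo` file. Below is a minimal sketch that prints such a file. The repository id `cloudera-manager`, the host `repo01.gpfs.net`, and the URL path are hypothetical. `gpgcheck=0` only keeps the example short; enable GPG checking with the Cloudera key where possible.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a yum .repo definition for a local Cloudera
# Manager mirror served by httpd. Point the URL at the directory that
# contains the repodata/ folder on your web server.
cm_repo_file() {
  local baseurl=$1
  cat <<EOF
[cloudera-manager]
name=Cloudera Manager (local mirror)
baseurl=${baseurl}
enabled=1
gpgcheck=0
EOF
}

# Usage (as root on every host; host name and path are hypothetical):
#   cm_repo_file http://repo01.gpfs.net/cloudera-repos/cm7/ > /etc/yum.repos.d/cloudera-manager.repo
#   yum clean all && yum repolist
```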

Step 2: Install Java Development Kit

Step 3: Install Cloudera Manager Packages

sudo yum install cloudera-manager-daemons cloudera-manager-agent cloudera-manager-server -y

Step 4: Install and Configure Databases

Run scm_prepare_database.sh to create the Cloudera database.

sudo /opt/cloudera/cm/schema/scm_prepare_database.sh [options] <databaseType> <databaseName> <databaseUser> <password>

Auto TLS on Cloudera Manager

Use case 2: Enabling Auto-TLS with an intermediate CA signed by an existing Root CA

There are three steps to this process. First, instruct Cloudera Manager to generate a Certificate Signing Request (CSR). Second, have the CSR signed by your company's Certificate Authority (CA). Third, provide the signed certificate chain to complete the Auto-TLS configuration. The following example illustrates the three steps.

- Before starting Cloudera Manager, initialize `certmanager` with the `--stop-at-csr` option:

export JAVA_HOME=/usr/lib/jvm/java-1.8.0-openjdk; /opt/cloudera/cm-agent/bin/certmanager --location /var/lib/cloudera-scm-server/certmanager setup --configure-services --stop-at-csr

This creates a Certificate Signing Request (CSR) file called ca_csr.pem in /var/lib/cloudera-scm-server/certmanager/CMCA/private.

We then ask the Active Directory team to sign the CSR (make sure you have a copy of it).

The certificate must be in X.509 format.

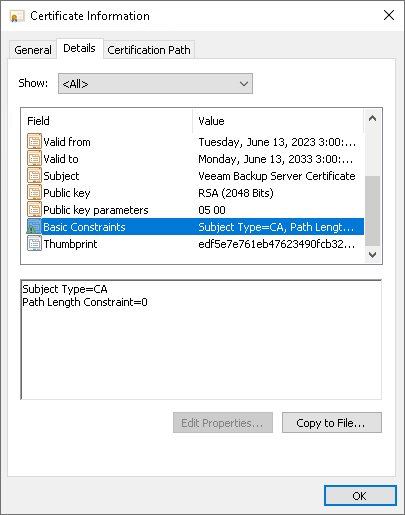

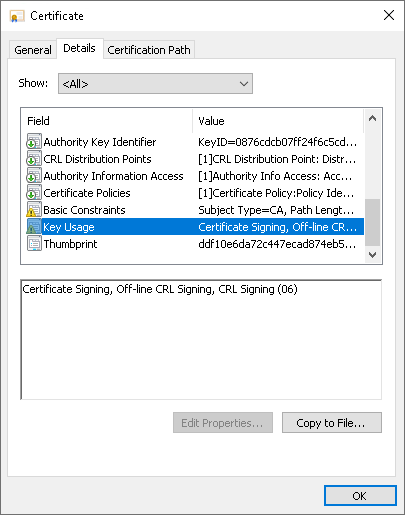

We need an intermediate (subordinate) CA signing certificate, that is, a certificate signed using the Subordinate CA template. To validate it, open the signed certificate's Details tab and check:

**Basic Constraints** --> Subject Type=CA

**Key Usage** --> Digital Signature, Certificate Signing, Off-line CRL Signing, CRL Signing (86)
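On a Linux host, you can make the same two checks from the `openssl x509 -noout -text` output. There, Basic Constraints appears as `CA:TRUE`, and the key usages appear as `Digital Signature, Certificate Sign, CRL Sign`. The value `86` above is the hex bit mask of digitalSignature (0x80), keyCertSign (0x04), and cRLSign (0x02). Below is a minimal sketch. The function name `check_sub_ca` and the file name in the usage comment are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `openssl x509 -noout -text` output on stdin and
# check that the certificate can act as a (subordinate) signing CA.
check_sub_ca() {
  local text
  text=$(cat)
  if ! grep -q 'CA:TRUE' <<<"$text"; then
    echo "Basic Constraints: not a CA certificate" >&2
    return 1
  fi
  if ! grep -q 'Certificate Sign' <<<"$text"; then
    echo "Key Usage: missing Certificate Sign" >&2
    return 1
  fi
  echo "OK: CA:TRUE and Certificate Sign present"
}

# Usage (file name is an example):
#   openssl x509 -in signed_ca_cert.pem -noout -text | check_sub_ca
```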

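If the signed certificate is in PKCS#7 (.p7b) format, convert it to a .cer file containing the certificate chain (add `-inform DER` if the .p7b file is DER-encoded):

openssl pkcs7 -print_certs -in ca_cdp_sa.p7b -out ca_cdp_chain.cer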

Convert a DER-encoded certificate to PEM format:

openssl x509 -inform der -in ad_cert.cer -out ad_cert.pem
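`openssl x509 -inform der` fails on a file that is already PEM, so it can help to check the encoding first. Below is a minimal sketch that treats any file with a `-----BEGIN` header as PEM and anything else as DER, which is the usual case for a binary .cer exported from Windows. The function name `cert_encoding` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print PEM if the file contains a "-----BEGIN" header
# (Base64 text), otherwise DER (binary encoding).
cert_encoding() {
  if grep -q -- '-----BEGIN' "$1"; then
    echo PEM
  else
    echo DER
  fi
}

# Usage (file names taken from the commands above):
#   if [ "$(cert_encoding ad_cert.cer)" = DER ]; then
#     openssl x509 -inform der -in ad_cert.cer -out ad_cert.pem
#   fi
```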

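Finally, complete the Auto-TLS setup by passing the trusted CA certificates and the signed certificate chain to `certmanager`:

export JAVA_HOME=/usr/lib/jvm/java-1.8.0-openjdk; /opt/cloudera/cm-agent/bin/certmanager --location /var/lib/cloudera-scm-server/certmanager setup --configure-services --trusted-ca-certs ca-certs.pem --signed-ca-cert=cm_cert_chain.pem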

In Active Directory

We need to add the following role in Active Directory and upload ca_csr.pem (the CSR, which does not contain the private key) in the wizard to have it signed.

| Role service | Description |
| --- | --- |
| Certification Authority (CA) | Root and subordinate CAs are used to issue certificates to users, computers, and services, and to manage certificate validity. |

Enable Kerberos on Cloudera Manager

If Kerberos is required, enable it in Cloudera Manager before installing the IBM Spectrum Scale service on CDP.

Deploy the IBM Spectrum Scale CSD

From the IBM Spectrum Scale cluster, get the gpfs.hdfs.cloudera.cdp.csd-<version-number>.noarch.rpm package and copy it to the Cloudera Manager node. Then install it and restart Cloudera Manager:

```shell
## Find the package on the HDFS Transparency nodes
find / -name 'gpfs.hdfs.cloudera.cdp.csd-*.noarch.rpm'

## Install the RPM on the Cloudera Manager node
rpm -ivh /root/gpfs.hdfs.cloudera.cdp.csd-<version-number>.noarch.rpm

## Restart Cloudera Manager
systemctl restart cloudera-scm-server
```

Install IBM Spectrum Scale

Ensure the CES HDFS NameNodes and DataNodes are not running.

/usr/lpp/mmfs/hadoop/sbin/mmhdfs hdfs status

Now create a cluster in Cloudera Manager and add the hosts to it.

Add DataNodes

In the IBM Spectrum Scale service installation wizard, perform the following:

Assign the NameNode and DataNode roles based on the actual CES HDFS NameNode and DataNode hosts.

Assign the Gateway roles to one or more CDP Private Cloud Base nodes. Assigning these roles creates the HDFS client configuration XML files under /etc/hadoop, which are required to run HDFS client commands.

Set the following IBM Spectrum Scale parameters:

- `default_fs_name` to `hdfs://yourClusterName:8020`
- `webhdfs_url` to `http://yourClusterName:50070/webhdfs/v1`
- `transparency.namenode.http.port` to `50070`. This is the default NameNode JMX metrics port.
- `transparency.datanode.http.port` to `1006`. This is the DataNode JMX metrics port. The default value is `9864` if Kerberos is disabled and `1006` if Kerberos is enabled.
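To double-check the values before entering them in the wizard, the minimal sketch below prints them for a given cluster name. `yourClusterName` above is a placeholder for the CES HDFS cluster name. The function `transparency_params` is hypothetical, and its key=value output is only a checklist, not a file that Cloudera Manager reads. The WebHDFS `LISTSTATUS` call in the comment is a standard quick check that the endpoint responds.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the IBM Spectrum Scale service parameters for a
# given cluster name. The 2nd argument (yes/no) says whether Kerberos is
# enabled, which only changes the DataNode HTTP port (1006 vs 9864).
transparency_params() {
  local cluster=$1 kerberos=${2:-no}
  local dn_port=9864
  if [ "$kerberos" = yes ]; then
    dn_port=1006
  fi
  cat <<EOF
default_fs_name=hdfs://${cluster}:8020
webhdfs_url=http://${cluster}:50070/webhdfs/v1
transparency.namenode.http.port=50070
transparency.datanode.http.port=${dn_port}
EOF
}

# Usage (cluster name is an example):
#   transparency_params mycluster yes
# Quick WebHDFS check (after kinit when Kerberos is enabled):
#   curl --negotiate -u : "http://mycluster:50070/webhdfs/v1/?op=LISTSTATUS"
```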